Images Are Matrices #

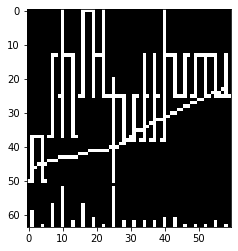

A digital image is nothing else but a matrix; each pixel is an entry of the matrix. For example, we can represent the following 20-day OHLC Image from Jiang, Kelly and Xiu (2022+)

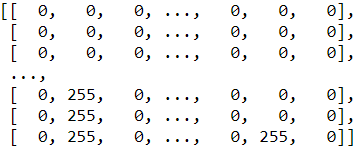

by a 64x60 matrix given by

where 255 means a white pixel and 0 means a black one.

In general, we can represent any $\mathrm{I}\times \mathrm{J}$ grayscale image as a matrix, say

$$\boldsymbol{V}=\{V_{\mathrm{i},\mathrm{j}}:\mathrm{i}=1,\ldots,\mathrm{I}, ~\mathrm{j}=1,\ldots,\mathrm{J}\}$$

where $V_{\mathrm{i},\mathrm{j}}$ indicates the grayscale of a pixel at the location $(\mathrm{i},\mathrm{j})$.

Flattening #

One can convert a matrix into a vector or vice versa (given the size of the matrices). This process of converting multiple grids into a vector is called flattening in machine learning or vectorization in mathematics.

Vectorizing $\boldsymbol{V}$ yields a $\mathrm{I}\times \mathrm{J}$ dimensional vector

$$\operatorname{vec}(\boldsymbol{V})=\begin{pmatrix}V_{1,1}\\\vdots\\V_{\mathrm{I},1}\\V_{1,2}\\\vdots\\V_{\mathrm{I},2}\\\vdots\\V_{1,\mathrm{J}}\\\vdots\\V_{\mathrm{I},\mathrm{J}}\end{pmatrix}.$$

We may input the flattened feature vector directly to a neural network by treating every entry as a feature for prediction. This approach is, however, not favorable because it ignores the spatial structure of the original matrices. The location information of the pixels are lost during the flattening process. To exploit the spatial information, we should use convolutional neural networks.